Automating Regression Testing: Strategy and Tools

- Expeed software

- 2 days ago


Automating regression testing isn't just about catching bugs. It's a strategic safety net for your entire application. Think of it this way: every time your team ships new code, they’re making changes to a complex system. Regression testing confirms those new updates didn't accidentally break something that was already working perfectly.

It’s about shipping new features with absolute confidence, knowing the foundation of your product is solid.

Why Automating Regression Testing Is a Strategic Imperative

For CTOs and engineering leaders, manual regression testing is more than just slow—it's a bottleneck that stifles innovation and puts your brand reputation on the line. Every new feature or fix introduces the risk of an unintended side effect, or "regression," where a core function suddenly fails.

Imagine an e-commerce platform that rolls out a slick new discount code feature. If your team has to manually re-test every critical workflow (login, search, add to cart, checkout), it's not just tedious; it's a huge risk, because human error is inevitable.

What if that new code silently breaks the payment gateway? You’re not just dealing with a bug; you’re losing revenue and customer trust with every single failed transaction. This is exactly why automation is no longer optional.

Shifting from Tactical Chore to Strategic Advantage

Viewing regression testing as just another QA task completely misses the point. A well-built automation strategy de-risks your releases, protects revenue streams, and—crucially—frees up your best engineers from soul-crushing, repetitive work.

Instead of mindlessly clicking through the same test cases sprint after sprint, they can focus on solving genuinely hard problems and building what’s next.

A strong regression automation suite gives developers the confidence to refactor code and innovate without the fear of causing a system-wide failure. It transforms quality assurance from a gatekeeper into an enabler of speed and agility.

The market data tells the same story. The global software testing market is projected to grow from $55.8 billion in 2024 to $112.5 billion by 2034, and automation is the engine behind that growth: the automation segment alone is projected to jump from $28.1 billion in 2023 to $55.2 billion by 2028, as highlighted in a recent QA trends report.

To get a better sense of how these two approaches stack up, here’s a quick comparison.

Manual vs Automated Regression Testing At a Glance

| Aspect | Manual Regression Testing | Automated Regression Testing |
|---|---|---|
| Speed | Extremely slow; dependent on human pace. | Extremely fast; tests run in minutes, not days. |
| Scalability | Poor. Adding more tests requires more people and time. | Excellent. Scales easily with the codebase and CI/CD pipelines. |
| Cost | High long-term operational cost (engineer salaries). | Higher initial investment, but significantly lower long-term cost. |
| Consistency & Scope | Prone to human error; scope is often limited due to time. | Highly consistent and reliable; enables broad test coverage. |
| Developer Feedback | Slow feedback loop, often days after code is written. | Immediate feedback, often within minutes of a code commit. |
| Team Morale | Can lead to burnout and frustration from repetitive tasks. | Frees engineers for high-value, creative work, boosting morale. |

The table makes it clear: while manual testing has its place, relying on it for regression is a recipe for falling behind. Automation is the only viable path forward for teams that want to move fast without breaking things.

Conducting a Baseline Audit of Your Current Process

Before you can build a business case for automation, you need to know exactly where the manual process is bleeding you dry. A quick baseline audit will give you the hard data you need to highlight the pain points and quantify the opportunity cost.

Start by digging into these questions:

How much time does a full manual regression cycle actually take? Don't guess. Calculate the total engineer-hours spent on this hamster wheel before every single release.

How many critical bugs have slipped into production in the last quarter? Trace them back. Would a consistent, automated regression test have caught them? Be honest.

Which features are tied directly to revenue? These are your crown jewels and your highest-priority candidates for automation. A failure here hits the bottom line.

What’s the developer sentiment? Are your engineers energized or exhausted by the current process? Tedious manual testing is a hidden driver of burnout and attrition.
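The first audit question is easier to answer with a quick calculation than with a guess. The sketch below is a minimal back-of-the-envelope model. The `RegressionCycle` fields and the sample figures are hypothetical placeholders for your own numbers: 4 testers, 24 hours each per cycle, 26 releases a year, and a $90 loaded hourly rate.

```python
from dataclasses import dataclass


@dataclass
class RegressionCycle:
    """Inputs for one manual regression cycle (all values are hypothetical)."""
    testers: int               # people involved in each cycle
    hours_per_tester: float    # hours each person spends per cycle
    releases_per_year: int     # how often the full cycle runs
    loaded_hourly_rate: float  # fully loaded cost of one engineer-hour

    def engineer_hours_per_cycle(self) -> float:
        return self.testers * self.hours_per_tester

    def engineer_hours_per_year(self) -> float:
        return self.engineer_hours_per_cycle() * self.releases_per_year

    def annual_cost(self) -> float:
        return self.engineer_hours_per_year() * self.loaded_hourly_rate


cycle = RegressionCycle(testers=4, hours_per_tester=24, releases_per_year=26, loaded_hourly_rate=90.0)
print(f"Engineer-hours per cycle: {cycle.engineer_hours_per_cycle():.0f}")  # 96
print(f"Engineer-hours per year:  {cycle.engineer_hours_per_year():.0f}")   # 2496
print(f"Annual cost:              ${cycle.annual_cost():,.0f}")             # $224,640
```

Even rough inputs like these turn "regression testing is slow" into a number leadership can weigh against the cost of automation.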

If you need a refresher, our guide on what is quality assurance in software development can help frame the fundamentals for your team.

This audit provides the ammunition you need to frame automation correctly: not as a cost center, but as a direct investment in speed, quality, and engineer retention. It sets the stage for defining clear, business-aligned KPIs for your initiative, like accelerating feature delivery and protecting your brand.

The long-term payoff is undeniable, but getting there requires specialized talent. Finding engineers who can build and maintain scalable, reliable automation frameworks is a massive challenge.

Choosing Your Automation Tools and Frameworks

Picking the right automation toolkit is one of those foundational decisions that will either set your testing strategy up for success or condemn it to a slow death. This isn't just about grabbing a tool with the longest feature list. It's a strategic choice that directly impacts your total cost of ownership, the skills you need on your team, and how well testing integrates with everything else.

Get this right, and automation becomes an accelerator. Get it wrong, and you'll be drowning in technical debt before you know it.

The market is flooded with options, from powerful open-source darlings like Selenium and Playwright to massive, do-it-all enterprise platforms. Each comes with serious trade-offs that every engineering leader needs to weigh.

Open Source vs. Enterprise Platforms

Open-source tools like Selenium, Cypress, and Playwright are wildly popular for a reason. They offer incredible flexibility and are propped up by huge, active communities. The catch? They demand a high level of technical skill to actually stand up and maintain. You aren't just adopting a tool; you're building a custom framework around it, and that requires a team of sharp SDETs (Software Development Engineers in Test).

Enterprise platforms, on the other hand, promise an all-in-one solution with built-in reporting, test management, and a support line you can actually call. This can lower the initial technical hurdle, but it comes with a hefty licensing fee and the risk of vendor lock-in.

The real decision isn't just about the tool—it's about the team you have, or the team you need to build. An open-source strategy means investing in high-end engineers. An enterprise solution shifts that investment into licensing fees and vendor support contracts.

As you evaluate your options, it's worth reviewing the top automated website testing tools to see what developers are actually using in the field.

Matching Tools to Your Architecture

A one-size-fits-all approach to tooling is a recipe for disaster. Your choice has to be anchored in the reality of your application's architecture.

Let’s look at a couple of real-world scenarios:

Modern Microservices Architecture: If your app is a distributed system of microservices, relying only on browser-level UI tests (the sweet spot of tools like Cypress) won't cut it. You'll need something that's API-first, like Postman, or a framework that handles both API and UI testing gracefully, like Playwright. This lets you test services in isolation before you ever touch the end-to-end user flow.

Legacy Monolithic Application: For a big, aging monolith with a brittle front-end, stability is everything. In this case, a battle-tested tool known for its robust cross-browser support, like Selenium, is often the smarter, more reliable choice. It's built to handle the quirks of older tech stacks.
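To make "test services in isolation" concrete, here is a minimal sketch of an API-level regression test. It uses only Python's standard library (`unittest`, `http.server`, `urllib`) so it runs anywhere. The price service, its `/prices/<sku>` endpoint, and the JSON payload are all hypothetical. In practice you would point the same kind of test at a locally started instance of your real service, or write it in Postman or Playwright's API testing support instead.

```python
import json
import threading
import unittest
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.error import HTTPError
from urllib.request import urlopen

# Hypothetical stand-in for one microservice. In a real pipeline you would
# start the actual service (for example, its container) instead.
PRICES = {"SKU-1": 1999}


class PriceServiceHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        prefix = "/prices/"
        sku = self.path[len(prefix):] if self.path.startswith(prefix) else None
        if sku in PRICES:
            status, payload = 200, {"sku": sku, "cents": PRICES[sku]}
        else:
            status, payload = 404, {"error": "not found"}
        body = json.dumps(payload).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging during test runs


class PriceServiceApiTest(unittest.TestCase):
    """Contract tests that exercise the service over HTTP, with no browser involved."""

    @classmethod
    def setUpClass(cls):
        # Port 0 asks the OS for a free port, so parallel runs don't collide.
        cls.server = HTTPServer(("127.0.0.1", 0), PriceServiceHandler)
        cls.base_url = f"http://127.0.0.1:{cls.server.server_address[1]}"
        threading.Thread(target=cls.server.serve_forever, daemon=True).start()

    @classmethod
    def tearDownClass(cls):
        cls.server.shutdown()
        cls.server.server_close()

    def test_known_sku_returns_price(self):
        with urlopen(f"{self.base_url}/prices/SKU-1") as resp:
            self.assertEqual(resp.status, 200)
            self.assertEqual(json.load(resp), {"sku": "SKU-1", "cents": 1999})

    def test_unknown_sku_returns_404(self):
        with self.assertRaises(HTTPError) as ctx:
            urlopen(f"{self.base_url}/prices/NOPE")
        self.assertEqual(ctx.exception.code, 404)
        ctx.exception.close()
```

Saved as, say, `price_service_test.py`, this runs with `python -m unittest price_service_test`. Because it talks to the service's HTTP contract directly, it finishes in milliseconds. That speed makes tests like this a natural fit for the fast tiers of a pipeline.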

The Build vs. Buy Framework Debate

Beyond the tool itself is the framework. Do you build a custom one from scratch or buy something pre-packaged? Building gives you total control to tailor every single detail to your exact needs. But make no mistake—it's a massive undertaking that demands serious upfront investment and continuous maintenance.

Buying a framework, or using the one baked into an enterprise platform, gets you moving much faster. The trade-off is rigidity. You're stuck with the vendor's design choices, which might not align with where you want to go in two or three years.

Often, a hybrid approach works best. Start with a solid open-source foundation like Playwright but build your own modular, reusable components and libraries on top. You get the flexibility of open source with the structure of a well-designed framework. This is how you create a test suite that can actually be maintained as the application evolves.

Ultimately, the right answer comes down to your team's skills, your budget, and your architectural reality. The fanciest tool in the world is useless without the engineering talent to wield it. Finding engineers who can navigate these tooling decisions and build resilient automation is the real challenge.

Designing High-Impact Test Cases for Maximum ROI

One of the easiest ways to kill a regression automation project is trying to automate everything. I've seen it happen time and again. Teams get caught up in the idea of total coverage, and they end up with a slow, brittle, and wildly expensive test suite that delivers nothing but headaches. This “boil the ocean” approach is a recipe for failure.

The smart money isn't on quantity; it’s on quality and business impact.

True ROI comes from a risk-based approach. Instead of asking, "What can we automate?" the real question is, "What must we automate to protect the business?" This simple shift in mindset moves you away from chasing vanity metrics like 100% test coverage and toward shielding your most critical user journeys and revenue streams.

Pinpointing Your Most Critical User Journeys

Before you write a single line of test code, you have to know what actually matters. What are the absolute, non-negotiable workflows that, if they broke, would cause a five-alarm fire? Think direct revenue loss, catastrophic data corruption, or severe brand damage.

Get practical about what keeps your business running:

E-commerce: Can a user find a product, add it to their cart, and actually buy it? That’s the whole ballgame.

SaaS Platform: Can a new user sign up, log in, and use the core feature they’re paying for? If not, you have a churn problem, not a bug.

Fintech App: Can a user check their balance and make a transfer? In finance, trust is everything, and broken core features shatter it instantly.

These high-value paths are where you start. A bug in a rarely-used settings page is an annoyance. A failure in the checkout flow is an existential threat. Prioritize accordingly.
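One lightweight way to "prioritize accordingly" is to give each journey a risk score and automate from the top down. The sketch below is an assumption-laden illustration, not a standard formula. The 1 to 5 scales, the multiplicative score, and the sample journeys are all hypothetical and should be tuned with your product and support data.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Journey:
    name: str
    business_impact: int     # 1 (cosmetic) .. 5 (direct revenue or data loss)
    failure_likelihood: int  # 1 (stable, rarely touched) .. 5 (churns often, bug history)
    usage: int               # 1 (rarely used) .. 5 (used in most sessions)

    @property
    def risk_score(self) -> int:
        # Multiplying means a journey must be important AND at risk AND used
        # to rank highly; the scales and the formula are assumptions to tune.
        return self.business_impact * self.failure_likelihood * self.usage


def automation_backlog(journeys: List[Journey], top_n: int) -> List[Journey]:
    """Return the top_n journeys to automate first, highest risk first."""
    return sorted(journeys, key=lambda j: j.risk_score, reverse=True)[:top_n]


journeys = [
    Journey("checkout", business_impact=5, failure_likelihood=4, usage=5),
    Journey("login", business_impact=5, failure_likelihood=2, usage=5),
    Journey("product search", business_impact=4, failure_likelihood=3, usage=5),
    Journey("settings page", business_impact=1, failure_likelihood=3, usage=1),
]

for j in automation_backlog(journeys, top_n=3):
    print(f"{j.name:<15} risk={j.risk_score}")
```

With these sample numbers, checkout (100), product search (60), and login (50) make the cut. The rarely used settings page (3) waits.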

Layering Tests for Fast Feedback

Not all regression tests are created equal, and they definitely shouldn't all run at the same time. The key to getting fast, actionable feedback without grinding development to a halt is to layer your tests strategically within your CI/CD pipeline.

A mature automation strategy gives you feedback at different speeds. You need quick smoke tests for an immediate "go/no-go" signal on every commit, and a comprehensive regression suite for deep validation that runs less often. The goal is to catch regressions as early as possible with the least amount of friction.

Think of it as a tiered defense system:

Smoke Tests: This is your tripwire. A tiny set of 5-10 tests that run on every single commit. They verify the absolute basics—like the app starting up or a user logging in—and should finish in under two minutes. If they fail, the build is broken. Period.

Sanity Tests: A slightly bigger suite that runs after a successful build. It’s a quick health check to ensure major features haven't regressed after new code was merged.

Full Regression Suite: This is the big one. It’s your comprehensive safety net covering a wide range of scenarios. Because it’s slow, you don’t run it on every commit. Instead, schedule it for a nightly run or gate it before a major release.

This layered model means developers get instant feedback where they need it most, while the heavier suites provide deep validation without becoming a bottleneck.
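In practice, layering usually means tagging each test with its tier and letting the pipeline select by tag. In pytest this is commonly done with custom markers such as `@pytest.mark.smoke` plus `pytest -m smoke`. To stay dependency-free, the sketch below builds the same idea in plain Python. The `tier` decorator, the `select` and `run` helpers, and the test names are hypothetical.

```python
from collections import defaultdict
from enum import IntEnum
from typing import Callable, Dict, List


class Tier(IntEnum):
    SMOKE = 1       # every commit: a handful of tests, under two minutes
    SANITY = 2      # after a successful build or merge
    REGRESSION = 3  # nightly or before a major release


_REGISTRY: Dict[Tier, List[Callable[[], None]]] = defaultdict(list)


def tier(level: Tier):
    """Hypothetical decorator that records which tier a test belongs to."""
    def wrap(fn):
        _REGISTRY[level].append(fn)
        return fn
    return wrap


def select(up_to: Tier) -> List[Callable[[], None]]:
    # Tiers are cumulative: a sanity run also includes every smoke test.
    return [fn for level in Tier if level <= up_to for fn in _REGISTRY[level]]


@tier(Tier.SMOKE)
def test_user_can_log_in():
    pass  # placeholder: drive the login flow here


@tier(Tier.SANITY)
def test_cart_totals_update():
    pass  # placeholder


@tier(Tier.REGRESSION)
def test_discount_code_edge_cases():
    pass  # placeholder


def run(up_to: Tier) -> List[str]:
    selected = select(up_to)
    for fn in selected:
        fn()
    return [fn.__name__ for fn in selected]


print(run(Tier.SMOKE))   # ['test_user_can_log_in']
print(run(Tier.SANITY))  # ['test_user_can_log_in', 'test_cart_totals_update']
```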

Writing Stable and Maintainable Tests with Design Patterns

Once you know what to test, how you write those tests will determine their long-term value. Nothing is more frustrating than a test suite full of brittle tests that break with every minor UI change. They become noise, and eventually, everyone starts ignoring them.

The secret to durable automation is using solid design patterns to separate your test logic from the nitty-gritty details of your application's UI.

The Page Object Model (POM) is the industry standard for a reason: it works. By using POM, you insulate your tests from the ever-changing front end. Instead of scattering UI locators (like CSS selectors and XPaths) all over your test scripts, you centralize them in dedicated "Page Object" classes. Each class represents a page or a major component.

So when a developer changes a button's ID on the login screen, you update it in just one place—the object—not in 50 different test scripts. This simple practice dramatically cuts down on maintenance and keeps your test suite a reliable signal of quality, not a source of constant rework.
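Here is a minimal sketch of the Page Object Model in Python. To keep it runnable without a browser, the `Driver` interface and `FakeDriver` are hypothetical stand-ins rather than a real library API. With Selenium you would call `driver.find_element(By.CSS_SELECTOR, ...)`, and with Playwright `page.locator(...)`. The page classes, selectors, and greeting text are illustrative too.

```python
from typing import Dict, List, Protocol


class Driver(Protocol):
    """Hypothetical minimal browser-driver interface (not a real library API)."""
    def fill(self, selector: str, text: str) -> None: ...
    def click(self, selector: str) -> None: ...
    def text_of(self, selector: str) -> str: ...


class LoginPage:
    # Every locator for the login screen lives here and nowhere else.
    USERNAME = "#username"
    PASSWORD = "#password"
    SUBMIT = "#login-button"  # if this ID changes, only this line changes

    def __init__(self, driver: Driver):
        self.driver = driver

    def log_in(self, user: str, password: str) -> "DashboardPage":
        self.driver.fill(self.USERNAME, user)
        self.driver.fill(self.PASSWORD, password)
        self.driver.click(self.SUBMIT)
        return DashboardPage(self.driver)


class DashboardPage:
    GREETING = ".greeting"

    def __init__(self, driver: Driver):
        self.driver = driver

    def greeting(self) -> str:
        return self.driver.text_of(self.GREETING)


class FakeDriver:
    """In-memory stand-in so the example runs without a browser."""
    def __init__(self) -> None:
        self.fields: Dict[str, str] = {}
        self.clicks: List[str] = []

    def fill(self, selector: str, text: str) -> None:
        self.fields[selector] = text

    def click(self, selector: str) -> None:
        self.clicks.append(selector)

    def text_of(self, selector: str) -> str:
        # A real driver would read the element's text; this fake echoes the user.
        return f"Welcome, {self.fields.get('#username', '')}"


def test_login_shows_greeting():
    # The test reads like a user journey and contains no raw selectors.
    driver = FakeDriver()
    dashboard = LoginPage(driver).log_in("ada", "s3cret")
    assert dashboard.greeting() == "Welcome, ada"
    assert driver.clicks == [LoginPage.SUBMIT]
```

The test itself never mentions `#login-button`. A renamed ID is a one-line change in `LoginPage`, no matter how many tests log in.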

Building a high-impact, maintainable test suite requires not just the right strategy, but the right talent. Finding engineers who understand risk-based prioritization and can implement robust design patterns is critical for success.

Integrating Automation into Your CI/CD Pipeline

Having a solid suite of automated regression tests is a great start. But the real magic happens when those tests become a completely invisible, seamless part of your daily development workflow.

The goal isn't to create another gate for developers to pass through. It's to make quality a background process, something that just happens. This is precisely where Continuous Integration and Continuous Deployment (CI/CD) pipelines come in.

By weaving your regression suite directly into the pipeline, you shift testing from a clunky, manual stage to an automated quality check that runs against every single code change. This gives developers the near-instant feedback they need to code with speed and confidence.

Triggering Tests for Instant Feedback

The heart of CI/CD integration is running the right tests at the right time. Your pipeline orchestrator—whether it’s Jenkins, GitLab CI, GitHub Actions, or something else—becomes the central nervous system for your quality process.

You can set up specific triggers to automatically kick off different parts of your test suite, ensuring every pull request or merge is validated before it ever pollutes the main branch.

A battle-tested setup usually looks something like this:

On Every Pull Request: Run a lightweight set of smoke tests. This gives an immediate "go/no-go" signal, confirming the new code hasn't completely broken the build.

On Merge to Main/Develop: Trigger a more substantial suite of sanity tests. This check ensures the newly integrated code plays nice with everything else.

Nightly Builds: Kick off the full regression suite. Since this can be time-consuming, running it overnight against the latest build provides an exhaustive check without bogging down the team during the day.
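The trigger-to-suite mapping above can live in a tiny script that the pipeline calls before running tests. This sketch assumes GitHub Actions, which exposes the `GITHUB_EVENT_NAME` (for example `pull_request`, `push`, or `schedule`) and `GITHUB_REF` environment variables. Jenkins and GitLab CI use different variables. The policy table, the branch list, and the suite names are hypothetical.

```python
import os

# Hypothetical policy table mirroring the triggers described above.
SUITE_FOR_TRIGGER = {
    "pull_request": "smoke",   # fast go/no-go signal
    "push": "sanity",          # push to main/develop after a merge
    "schedule": "regression",  # nightly cron run
}

PROTECTED_BRANCHES = {"refs/heads/main", "refs/heads/develop"}


def choose_suite(event_name: str, ref: str = "") -> str:
    """Map a CI event to the test tier that should run for it."""
    if event_name == "push" and ref not in PROTECTED_BRANCHES:
        return "smoke"  # pushes to feature branches only need the tripwire
    # Unknown or unlisted events default to the cheapest suite.
    return SUITE_FOR_TRIGGER.get(event_name, "smoke")


if __name__ == "__main__":
    print(choose_suite(os.environ.get("GITHUB_EVENT_NAME", ""),
                       os.environ.get("GITHUB_REF", "")))
```

The printed suite name can then be passed to whatever test-selection option your runner offers. Keeping the policy in one small, testable function makes it easier to change than logic scattered across pipeline YAML.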

This tiered approach creates a perfect balance between feedback speed and test depth. For a deeper dive into how this fits into the bigger picture, check out our guide on Agile vs. DevOps.

Slashing Runtimes with Parallel Execution

A full regression suite can easily take hours to run. In a modern CI/CD pipeline, that’s an eternity. If developers have to wait that long for results, they'll just move on to the next task, and the value of your automation plummets.

The answer is parallel execution.

Instead of running tests one by one, you run them simultaneously across multiple virtual machines or containers. A suite that takes two hours to run sequentially can be knocked out in just 10-15 minutes by fanning it out across a dozen environments. This one change can dramatically shrink your feedback loop, making comprehensive testing practical even in the fastest-paced pipelines.

Parallelization isn't a nice-to-have; it's a necessity for any serious automation effort. The whole point is to get comprehensive results back to developers while the code they just wrote is still fresh in their minds.
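The arithmetic behind the two-hours-to-minutes claim is simple: 120 minutes of work split evenly across 12 workers is 10 minutes of wall-clock time, before setup overhead. The catch is "evenly". Test runners typically handle the splitting for you, for example Playwright Test's `--shard` option or the pytest-xdist plugin. The sketch below just shows one common balancing heuristic, greedy longest-first assignment. The function names and timings are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple


def shard_by_duration(durations: Dict[str, float], shards: int) -> List[List[str]]:
    """Greedy longest-first split: each test goes to the least-loaded shard."""
    heap: List[Tuple[float, int]] = [(0.0, i) for i in range(shards)]  # (total_secs, shard)
    buckets: List[List[str]] = [[] for _ in range(shards)]
    for name, secs in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        total, idx = heapq.heappop(heap)  # shard with the smallest total so far
        buckets[idx].append(name)
        heapq.heappush(heap, (total + secs, idx))
    return buckets


def wall_clock(durations: Dict[str, float], buckets: List[List[str]]) -> float:
    # Shards run in parallel, so the run ends when the slowest shard ends.
    return max(sum(durations[n] for n in b) for b in buckets)


# Hypothetical timings: 24 tests x 5 minutes = 2 hours sequentially.
timings = {f"test_{i:02d}": 300.0 for i in range(24)}
plan = shard_by_duration(timings, shards=12)
print(wall_clock(timings, plan) / 60)  # 10.0 minutes, ignoring setup overhead
```

Feeding in recorded durations from previous runs keeps one slow test from leaving a single shard running long after the others finish.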

Overcoming Common Pipeline Hurdles

Just plugging your tests into a pipeline isn’t enough. You’re going to hit some real-world challenges.

One of the biggest is managing test environments. Your automated tests demand a stable, predictable, and production-like environment to run in. This is where containerization tools like Docker are a game-changer, letting you spin up clean, consistent environments for every single test run.

Another classic problem is test flakiness—tests that fail intermittently for no obvious reason. A few flaky tests can destroy the team's trust in the entire automation suite. Tackling this requires discipline: build in smart retries, design tests to be completely independent, and make them resilient to minor environmental hiccups.
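"Smart" retries should make flakiness visible, not hide it. Below is a minimal sketch of a retry decorator that re-runs a failing test a few times but records every test that only passed on a retry, so those tests can be quarantined and fixed. The `retry` decorator and `FLAKY_LOG` are hypothetical. Plugins such as pytest-rerunfailures offer a ready-made `--reruns` option.

```python
import functools
import time

FLAKY_LOG = []  # (test_name, attempts) for tests that passed only after a retry


def retry(max_attempts=3, delay_seconds=0.0, exceptions=(AssertionError,)):
    """Re-run a test on failure, but record the flake instead of hiding it."""
    def decorator(test_fn):
        @functools.wraps(test_fn)
        def wrapper(*args, **kwargs):
            for attempt in range(1, max_attempts + 1):
                try:
                    result = test_fn(*args, **kwargs)
                except exceptions:
                    if attempt == max_attempts:
                        raise  # failing every time is a real failure, not a flake
                    time.sleep(delay_seconds)
                else:
                    if attempt > 1:
                        FLAKY_LOG.append((test_fn.__name__, attempt))
                    return result
        return wrapper
    return decorator


_calls = {"n": 0}


@retry(max_attempts=3)
def test_sometimes_slow_search():
    # Simulates a test that fails once (say, a timing hiccup) and then passes.
    _calls["n"] += 1
    assert _calls["n"] >= 2


test_sometimes_slow_search()
print(FLAKY_LOG)  # [('test_sometimes_slow_search', 2)]
```

Publishing `FLAKY_LOG` alongside the test results gives the team a concrete list of tests eroding trust in the suite.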

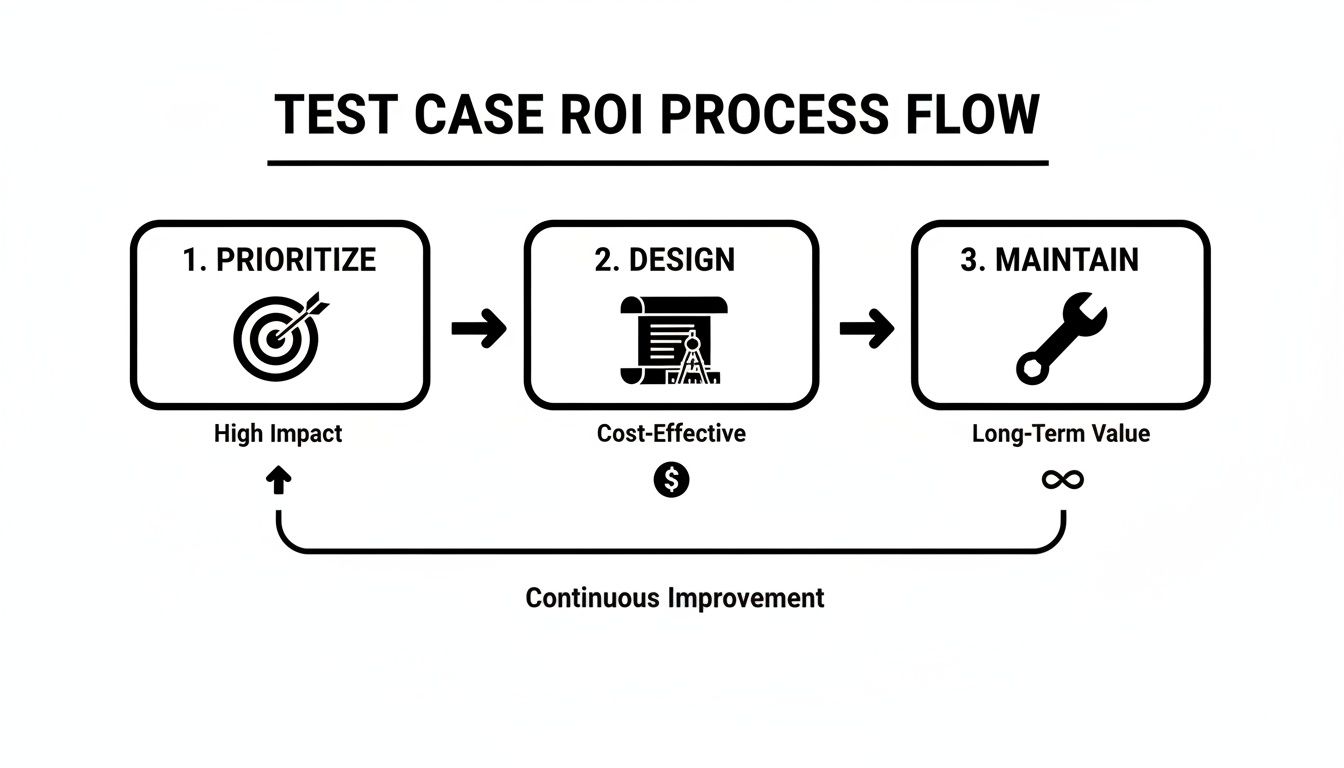

Finally, you need visibility. The process illustrated above shows how to think about the entire lifecycle of a test case to maximize its value, from initial prioritization through ongoing maintenance.

This flow underscores a critical point: a test case’s value isn't just in its creation, but in its entire lifecycle of execution and maintenance. It's vital to have dashboards that give every stakeholder a clear, real-time view of release readiness, turning raw test output into actionable intelligence.
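Most dashboards start from the same raw material: the JUnit-style XML report that many test runners and CI tools can emit. The sketch below condenses such a report into a release-readiness summary using Python's built-in `xml.etree.ElementTree`. The `Summary` class, the "no failures" gate, and the sample report are hypothetical. Real gates often also cap skipped tests or exclude quarantined ones.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass, field
from typing import List


@dataclass
class Summary:
    passed: int = 0
    failed: List[str] = field(default_factory=list)
    skipped: int = 0

    @property
    def total(self) -> int:
        return self.passed + len(self.failed) + self.skipped

    def release_ready(self) -> bool:
        # Hypothetical gate: ship only when no test failed or errored.
        return not self.failed


def summarize_junit(xml_text: str) -> Summary:
    """Count outcomes per <testcase> in a JUnit-style XML report."""
    summary = Summary()
    for case in ET.fromstring(xml_text.strip()).iter("testcase"):
        name = f"{case.get('classname', '')}.{case.get('name', '')}"
        if case.find("failure") is not None or case.find("error") is not None:
            summary.failed.append(name)
        elif case.find("skipped") is not None:
            summary.skipped += 1
        else:
            summary.passed += 1
    return summary


REPORT = """
<testsuite name="regression" tests="4">
  <testcase classname="checkout" name="test_pay_with_card"/>
  <testcase classname="checkout" name="test_apply_discount">
    <failure message="expected 10% off"/>
  </testcase>
  <testcase classname="search" name="test_filters"/>
  <testcase classname="legacy" name="test_old_export"><skipped/></testcase>
</testsuite>
"""

s = summarize_junit(REPORT)
print(f"{s.passed}/{s.total} passed, {s.skipped} skipped, ready={s.release_ready()}")
print("Failures:", s.failed)  # ['checkout.test_apply_discount']
```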

Integrating and scaling a regression suite in a CI/CD pipeline requires a specific kind of engineering talent. You don't just need people who can write tests; you need engineers who understand DevOps principles, environment management, and pipeline orchestration. Finding them is tough.

AI's Answer to Brittle Tests: Self-Healing and Predictive Automation

Test maintenance is the silent killer of automation initiatives. You build a beautiful test suite, it gives everyone confidence, and then it slowly decays into a noisy, unreliable mess that the team starts to ignore. The main culprit? Minor UI changes. A button's ID gets tweaked, a form field is renamed, and suddenly dozens of tests are bleeding red.

This constant brittleness forces your best engineers to waste time on tedious script repairs instead of shipping new features. It's a frustrating, reactive cycle. But now, AI is tackling this problem head-on, turning fragile automation into a resilient, self-adapting system.

The Power of Self-Healing Tests

The most immediate win you'll see from AI in regression testing is self-healing tests. Instead of locking onto a single, rigid selector (like an element's ID), AI-powered tools identify UI elements by looking at multiple attributes: their text, position, color, and relationship to everything else on the page.

So when a developer changes that button ID, a traditional script breaks instantly. An AI-driven test, however, sees the change, recognizes the element it's supposed to be interacting with using those other attributes, and automatically updates the script with the new selector. Often, no human ever has to get involved. This directly attacks the number one cause of test flakiness and maintenance headaches.
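The core idea behind self-healing locators can be illustrated without any AI. Record a "fingerprint" of several attributes while the test passes. When the primary ID stops matching, score every candidate element against that fingerprint. This is a deliberately simplified, hand-weighted sketch, not how any particular product works; commercial tools use richer signals and learned models. The `Element` type, the weights, and the threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Element:
    id: str
    tag: str
    text: str
    x: int
    y: int


# Hypothetical weights: how much each attribute counts toward "same element".
WEIGHTS = {"tag": 1.0, "text": 3.0, "position": 2.0}
MATCH_THRESHOLD = 4.0


def similarity(fingerprint: Element, candidate: Element, max_shift: int = 50) -> float:
    score = 0.0
    if candidate.tag == fingerprint.tag:
        score += WEIGHTS["tag"]
    if candidate.text.strip().lower() == fingerprint.text.strip().lower():
        score += WEIGHTS["text"]
    if abs(candidate.x - fingerprint.x) <= max_shift and abs(candidate.y - fingerprint.y) <= max_shift:
        score += WEIGHTS["position"]
    return score


def locate(page: List[Element], fingerprint: Element, healed: Dict[str, str]) -> Optional[Element]:
    """Try the recorded ID first; fall back to attribute matching and log the heal."""
    for el in page:
        if el.id == fingerprint.id:
            return el
    best = max(page, key=lambda el: similarity(fingerprint, el), default=None)
    if best is not None and similarity(fingerprint, best) >= MATCH_THRESHOLD:
        # Record old -> new ID so the stored locator can be updated
        # (automatically, or after a quick human review).
        healed[fingerprint.id] = best.id
        return best
    return None  # no confident match: fail the test instead of guessing


recorded = Element(id="buy-now", tag="button", text="Buy now", x=400, y=620)
# After a release, the button's ID was renamed and it moved slightly.
page = [
    Element(id="search", tag="input", text="", x=100, y=40),
    Element(id="checkout-cta", tag="button", text="Buy Now", x=410, y=615),
    Element(id="help", tag="button", text="Help", x=900, y=620),
]
heals: Dict[str, str] = {}
target = locate(page, recorded, heals)
print(target.id, heals)  # checkout-cta {'buy-now': 'checkout-cta'}
```

The threshold is the important design choice. Set it too low and a "healed" test quietly clicks the wrong button, which is worse than a red build.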

Self-healing isn't just about fixing broken locators; it's about making your entire test suite more resilient to the natural evolution of your product. It transforms test maintenance from a constant chore into an occasional review process, freeing your engineers to focus on innovation.

The market is moving fast on this: 89% of organizations expect AI-driven risk analysis to be the foundation of their QA decisions by 2026. Historically, roughly 47% of a testing cycle was spent just fixing scripts broken by minor UI changes. With AI, experts project a 45% reduction in that manual effort, paving the way for true continuous testing.

Beyond Fixing: Predictive Automation and Smarter Prioritization

AI is doing more than just fixing what's broken; it's getting predictive. By analyzing data from real user behavior in production, AI can build a clear picture of how customers actually use your application. It pinpoints the most common features, the most critical user journeys, and the areas most likely to cause trouble.

This insight fuels much smarter test prioritization. Instead of running the entire regression suite for every minor code change, an AI-powered system can recommend a small, targeted set of tests covering the highest-risk areas for that specific build. You get faster feedback where it counts.

Identifies High-Traffic Paths: AI can see which workflows (like checkout or user registration) are mission-critical and ensures they get the most rigorous testing.

Predicts Regression Hotspots: By connecting past bugs to specific parts of the codebase, AI can predict where new changes are most likely to introduce regressions.

Optimizes Resource Usage: Running fewer, more intelligent tests in your CI/CD pipeline cuts down on costs and gives developers faster, more relevant feedback.
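A useful way to see what these systems automate is a hand-written, non-AI version of the same decision. This is often called test impact analysis. The inputs are which tests exercise which source files (coverage.py, for example, can record per-test contexts) and how often each test has failed historically. The sketch below swaps the learned model for a simple heuristic. The `select_tests` function, its parameters, and the sample data are hypothetical.

```python
from typing import AbstractSet, Dict, List


def select_tests(
    changed_files: AbstractSet[str],
    coverage_map: Dict[str, AbstractSet[str]],  # test name -> source files it exercises
    failure_rate: Dict[str, float],             # test name -> historical failure rate (0..1)
    always_run: AbstractSet[str] = frozenset(),  # e.g. the smoke tier
    budget: int = 10,
) -> List[str]:
    """Pick tests that touch the change, historically riskiest first, within a budget."""
    impacted = {t for t, files in coverage_map.items() if set(files) & set(changed_files)}
    # Sort by descending failure rate; the name is a tiebreaker for stable output.
    ranked = sorted(set(impacted) - set(always_run), key=lambda t: (-failure_rate.get(t, 0.0), t))
    return sorted(always_run) + ranked[: max(0, budget - len(always_run))]


coverage_map = {
    "test_checkout_total": {"cart.py", "pricing.py"},
    "test_discount_codes": {"pricing.py", "promotions.py"},
    "test_search_filters": {"search.py"},
    "test_login": {"auth.py"},
}
failure_rate = {"test_discount_codes": 0.12, "test_checkout_total": 0.05}

# A change to pricing.py runs the smoke test plus the two pricing-related tests.
print(select_tests({"pricing.py"}, coverage_map, failure_rate,
                   always_run={"test_login"}, budget=3))
```

AI-driven tools refine the same two inputs, estimating impact and risk from history instead of from a fixed table. The full suite still runs nightly as a backstop.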

This concept is part of a bigger trend called AIOps (Artificial Intelligence for IT Operations), where systems learn from historical data to automate responses. An AI testing tool learns to prioritize tests in much the same way. Our article explaining what AI automation is and how it works dives deeper into these mechanisms. Adopting AI isn't just about efficiency; it's about building a quality strategy that can keep up with modern development.

Building Your Elite Automation Team

Let’s be brutally honest. The most sophisticated automation tools and a perfectly crafted strategy are completely worthless without the right people to bring them to life. A brilliant framework will crumble under the weight of poor execution, and a CI/CD pipeline becomes a bottleneck if it’s not in the hands of engineers who actually get its complexities.

Ultimately, the success of your entire regression automation effort comes down to the people behind the keyboard. This is often the hardest piece of the puzzle to solve. Finding engineers who have that rare blend of deep development chops, a quality-first mindset, and genuine strategic vision is a massive challenge for any company.

This is where you gain your real competitive edge—not just with tech, but with talent.

In-House Experts vs. Flexible Staff Augmentation

When it's time to build your team, you've really got two main paths to consider. Neither is universally "right" or "wrong"—it all comes down to what your organization needs, your budget, and how fast you need to move. The best choice is the one that lines up with your business goals.

Cultivating In-House Experts: This is the traditional route—hiring full-time, permanent employees to build and own your automation practice. The upside here is huge. You’re building deep institutional knowledge, fostering long-term ownership, and weaving a culture of quality into the very fabric of your engineering team. The downside? This path is slow and incredibly expensive. The hunt for elite Software Development Engineers in Test (SDETs) can drag on for months, and you're competing against everyone for that top-tier talent.

Leveraging Staff Augmentation: This model gives you incredible flexibility, allowing you to bring in specialized experts on a project or contract basis. It’s the perfect solution when you need to scale up fast for a big release, tackle a specific technical beast like building a new framework from scratch, or inject some fresh, specialized expertise into your team without the long-term overhead of a direct hire.

The modern, winning approach isn’t about choosing one over the other; it’s about blending them. A core in-house team owns the long-term vision and the institutional knowledge, while augmented experts provide the burst capacity and niche skills needed to crush key initiatives and keep things moving at full speed.

This hybrid model truly offers the best of both worlds: the stability of a permanent team combined with the agility to adapt to whatever the project throws at you.

Key Roles for Your Automation Dream Team

A world-class automation team is more than just a few testers who can code. It’s a carefully assembled group of specialists, with each role bringing a unique and critical skill set to the table.

Automation Architect: This is your visionary, your strategic leader. They design the entire overarching framework, select the right tools for the job, and make sure the automation strategy is perfectly aligned with the broader engineering and business goals. They’re always thinking about scalability, maintainability, and the long-term ROI.

Software Development Engineer in Test (SDET): These are your builders—the hands-on implementers. SDETs are skilled developers who live and breathe clean, robust, and maintainable test code. They take the architect’s vision and turn it into reality, building out the test suites and wiring them into the CI/CD pipeline.

AI/ML Test Engineer: A newer, but increasingly critical, role. These specialists are laser-focused on implementing AI-powered testing solutions. They’re the experts in self-healing test frameworks, using predictive analytics to find bugs before they happen, and leveraging machine learning to intelligently optimize which tests get run and when.

Finding people with these skills is a major hurdle. For some actionable advice on this, our ultimate guide to recruiting and hiring software engineers is packed with valuable insights for attracting and closing top-tier technical talent.

Gaining a Competitive Edge with Global Talent

Here's the reality: the talent you need probably isn't in your zip code. The competition for elite automation and AI engineers is global. If you limit your search to your local area, you're putting yourself at a massive disadvantage right from the start.

This is where a strategic staffing partner becomes an absolute game-changer. By tapping into global talent pools, you get access to world-class engineers who bring diverse perspectives and deep expertise—often more cost-effectively than trying to compete in overheated local markets. Whether you're building a team from the ground up or just need to add some specific firepower, having access to the top 1% of engineers worldwide is how you win.

Your automation strategy deserves an elite team to execute it. At TekRecruiter, a premier technology staffing, recruiting, and AI Engineer firm, we specialize in helping innovative companies deploy the top 1% of engineers anywhere. Whether you need to make a critical permanent hire or flexibly augment your team with nearshore experts, we provide the talent to turn your quality vision into a reality. Let us help you build the team that will accelerate your journey to excellence. Learn more about how we can build your team at https://www.tekrecruiter.com.
